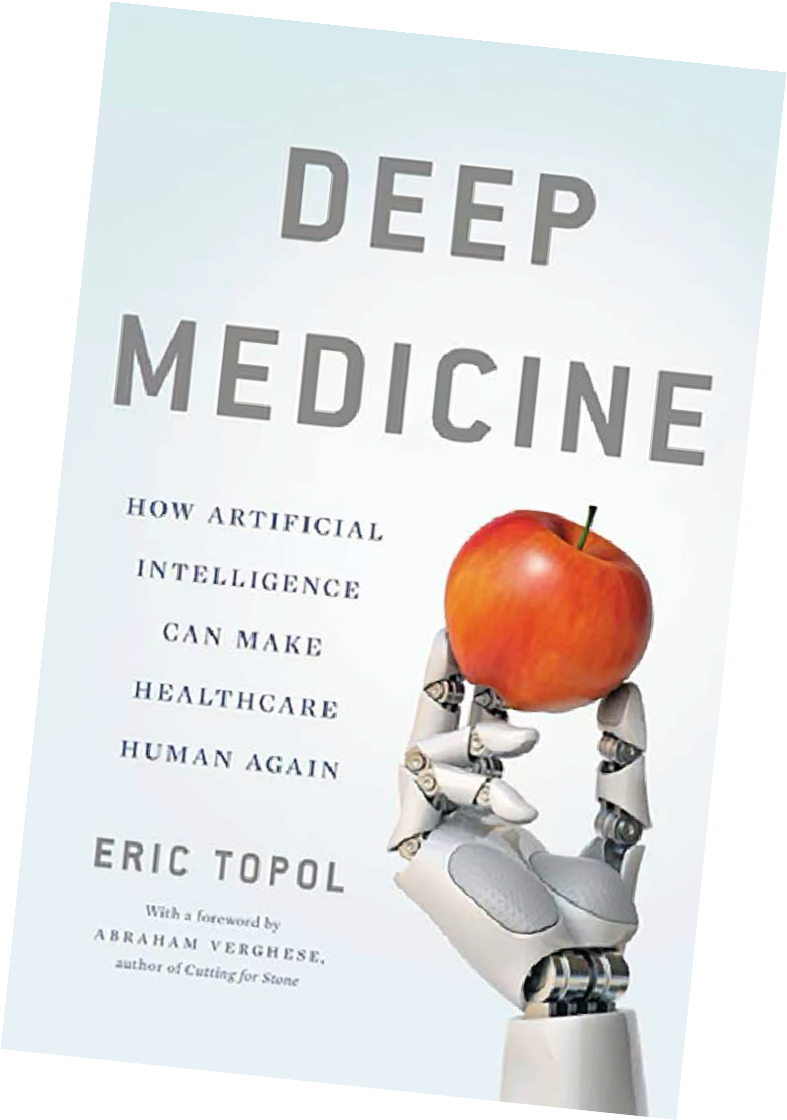

Few topics have sparked such extensive and controversial debate as ChatGPT and other emerging models of artificial intelligence (AI). As this technology advances at lightning speed, some worry that machines will make medicine robotic and impersonal. But if used intentionally, physician-scientist Dr. Eric Topol argues, machines may actually make medicine more human.

In his prescient book Deep Medicine, Dr. Topol outlined how AI may empower clinicians and revolutionize patient care. Four years on from the book’s publication, we catch up with Dr. Topol on the latest progress in harnessing AI for maximal benefit as well as the major barriers to moving this technology forward.

A conversation with Dr. Eric Topol

Medicine faces a care crisis. Over the past few decades, health providers have become increasingly hampered by administrative duties, patient data and complex insurance policies that have eroded both the quality of care and the time spent with patients. Physicians have become “data clerks,” Dr. Eric Topol tells CuraLink. That shift has led to widespread dissatisfaction on both sides of clinic doors. Crunched for time, many providers feel they are delivering suboptimal care while patients often feel unheard, dismissed or misunderstood.

According to Dr. Topol, it’s time to lean on machines. If used strategically and ethically, artificial intelligence could streamline the massive data flood in medicine and reduce the administrative burden in health care.

In issue 16 of CuraLink, Dr. Eric Topol shares how AI could one day induce a widespread “keyboard liberation” and help restore the patient-doctor relationship by giving clinicians and patients the ultimate gift: time.

You are a leading clinician and researcher trying to predict the future of medicine. Over the last few decades, why have you zeroed in on communicating about the use of big data, artificial intelligence (AI) and precision medicine?

Eric Topol, MD, Founder and Director, Scripps Research Translational Institute; Executive Vice President, Professor, Molecular Medicine, and Gary and Mary West Endowed Chair of Innovative Medicine, Scripps Research

These topics make me tick. It is what I think and read about all the time and what we work on in my research. Throughout my career as a practicing physician, I’ve known that things are not optimal. So how can we fix this? How can we make it much better? How can we improve patient care and improve the accuracy of diagnoses, treatments and prevention strategies? I’ve known that we can do better. This is my path toward how we might get there.

In Deep Medicine, you write that medicine is in a state of crisis—that the field has failed patients by not treating them as individuals. Four years on from publication, do you still agree with that sentiment, and can you share your view on the healthcare system today?

It’s been four years since Deep Medicine was published and, unfortunately, things haven’t improved. Currently, medicine is fast and shallow. We do not have enough time for patients. Technology may give us that missing time, but it won’t happen by accident or naturally. We have to fight for it.

There is a global crisis among clinicians. Most feel like they are missing out on their primary goal in medicine, which is to care for patients. Patients want to know they’re being looked after—that there’s true empathy, trust and presence and that clinicians have their backs.

Over the last 40 years, especially in the last 10 to 15 years, there has been a steady decay of this type of care because of things like electronic health records and expectations of seeing patients in very minimal time. These concerning trends haven’t improved yet, but I do remain optimistic that we will get to a much better place in the future.

Did you have a particular experience that compelled you to explore AI’s potential utility in medicine? What made you confident that machine learning and AI should be cautiously embraced, not feared or rejected?

The need for AI only became evident when we began to gather so much data on patients.

“As humans, we can’t deal with the high level of information. We need to lean on machines.”

In the old days, we didn’t have much information per patient. There was no genome. There weren’t fancy high-resolution MRI scans or PET-CT scans, labs across multiple portals or environmental and wearable sensors.

The number of sources and nodes of data supply for each person is just extraordinary and is beyond human capability. We need machines to rescue us in this situation. There’s no other choice.

AI was in a long hibernation until we reached this deep learning phase where AI could take in lots of data and help interpret it, especially for images. This technology has given machines eyes that are complementary and far better, in many respects, than even expert human eyes.

As we’ve seen with ChatGPT and other language models, artificial intelligence often sparks confusion and controversy. What should everyday people understand about AI’s evolution and its potential uses?

Up until now, deep learning was mainly used for images. It wasn’t great for speech and text. Recently, large language models like ChatGPT that have captured a lot of imagination and consternation have changed that. ChatGPT got its start a few years ago, but it is hitting its stride now. This type of multimodal AI can work with text and integrate it with multiple sources of data. You can’t do that without self-supervised learning, which is part of ChatGPT and these other language models. We needed this type of platform, and it’s going to get better.

If you want to prevent an illness that you are genetically predisposed to or want to better manage a chronic condition, multimodal AI can help. Wouldn’t it be great if your data were being constantly reviewed with your consent and you were coached by a virtual health assistant with access to a clinician when you needed it?

These are exciting opportunities, but it’s still very early. This technology is a double-edged sword. It can help quite a bit, but as we’ve already seen, it can be prone to errors, hallucinations and overconfidence. But whatever critiques you see today will be improved. There are already GPT-4, Sparrow and many other models emerging. Once we get this right, through training and validation, it will help get us where we need to go.

Rather than make medicine more formulaic or robotic, you argue that artificial intelligence can make medicine more human. How might AI have this effect?

That’s the premise of Deep Medicine, and I still believe it is possible. Already, we’re seeing the ability to make many doctorless screening diagnoses, whether it’s a skin lesion or rash, a urinary tract infection or a child’s ear infection. The list keeps growing. These are common reasons why people go see a doctor. They may not be serious or life-threatening, but they’re important. Appointments for these sorts of problems are going to ultimately be greatly reduced.

As I touched on, virtual coaching is also going to increase. It has already begun with managing high blood pressure, diabetes and depression, but it’s going to get much better. On the patient side, you will have much more support using AI, which then reduces the need to see a healthcare provider. The provider can then segment their time for more serious matters like making a new complex diagnosis.

“We need physicians to stand up for patients.”

One of the biggest problems in the United States is that health care is basically managed by non-clinician administrators. The creeping growth in the number of these administrators accounts for much of the workforce’s expansion. Healthcare systems need to prioritize the patient-doctor relationship. We’ve seen a great attrition of that relationship. In the 1970s and 1980s, this was a precious, trusted and intimate bond. Now, we don’t see that very often.

At speaking engagements, I often ask people: “How many of you have been dismissed by your doctor?” Almost everyone raises their hand. That wasn’t characteristic decades ago. Keyboards are a big part of that—working on computers rather than attending to the patient. Less time generally means less time to conduct a good physical exam. We don’t listen to patients much because we interrupt them within seconds. So there are a lot of factors here that can be improved on both the clinician and patient sides if we stand up for our patients. But that’s a big if. It won’t happen naturally. It’s going to take work.

How can regulators keep pace with the rapid development of artificial intelligence while protecting the public from potential harm?

With AI in health care, there is little transparency and a lack of compelling evidence. The medical community needs strong evidence that this stuff works and can really make a difference. We don’t have much of that in the field. Meanwhile, the FDA accepts algorithms that are done with retrospective data, which isn’t a great approach. A lot of this data is proprietary or has never been published, so it’s not made available to the medical community. There is also this lower bar for clearance called 510(k). That doesn’t help either.

A lot of doctors who are threatened by this technological era can’t even review these companies’ data or it isn’t compelling to them in the first place. There aren’t many great randomized or prospective trials that prove unquestionably that this should be the standard of care. We can’t move on to full adoption until we all share the mindset that the field of AI must be transparent, high-quality and based on rigorous studies. There are very few examples of that so far.

How might regulators encourage greater rigor and transparency?

The U.S. Food and Drug Administration (FDA) could tighten things up. Currently, they are letting a lot of algorithms go through with data that’s opaque and low-quality. It’s not helpful for the field to have a low threshold for these retrospective, unpublished studies.

Companies say: “The data is proprietary. It’s our secret sauce.” But at the very least, the FDA should demand that the data behind an algorithm be made public, even if a company wants to keep it proprietary. But they don’t. Regulators don’t have a lot of teeth in that respect, and I’d love to see that change.

“The beauty of AI neural networks is that they have unlimited hunger for data.”

AI is being treated in the classic way, but these are not static models like drugs or medical devices. These are dynamic, autodidactic models that just get better and better with more information input. The FDA doesn’t know how to deal with this kind of technology. We’re currently losing the power of these networks because the FDA freezes the algorithm at the time of its approval or clearance, which slows down the field. If you have any other iteration, you must bring the updated version under a whole new application.

The algorithm could improve greatly over time, but it doesn’t. If everything was transparent, and there were periodic updates about how an algorithm was performing, we could figure out how to better regulate it. My colleagues and I converse with FDA regulators about our concerns, and they recognize these issues. So there is a lot of hope that this will eventually get on track.

What are the major risks around AI and machine learning in medicine that people should keep in mind?

There are plenty of risks. That’s why we must get this right. One of the risks that’s very well-demonstrated is bias. It’s not necessarily a bias of the AI, per se. It’s the bias of our culture. There are many examples of this: Data inputs may be based on our embedded biases, or an algorithm model doesn’t interrogate data for possible biases. So that has to be attended to. If you have biased inputs for something like skin lesion detection, where there aren’t many people of color represented, obviously, it can only be extrapolated to white people. The biases can be much worse than that. Algorithms can be misused and cause all sorts of problems in health care.

Another risk that’s brought up a lot is explainability. If you can’t explain exactly how the algorithm is working, then that’s a problem. I’m not so sure that’s a major issue if it works exceptionally well and has compelling data. In medicine, there are plenty of things we can’t explain like anesthetics or various treatments. We have no idea how they work, but they work. So if the algorithm works exceptionally well and it’s incontrovertible, it could be okay. We’re also using AI to deconstruct the models themselves and determine the features accounting for their capacity.

Another major concern is that AI will make inequities worse. All the things we’ve discussed could only be accessible to the wealthy or privileged. But what’s exciting is seeing how AI is being rolled out in low- and middle-income countries to a larger degree, in some cases, than in wealthy countries. My favorite example is the smartphone ultrasound. Someone can take a probe and put it on their chest. Even if you don’t know how to do an echocardiogram, the AI will walk you through it. The resulting images are extraordinary. You see everything, and you can share the images immediately with the patient. Smartphone ultrasounds are taking off in the hinterlands of Africa, as well as in countries like India. This shows us that AI doesn’t have to worsen inequities. It can reduce them.

There are lots of other potential limitations. If an AI algorithm develops a glitch, it can quickly harm a lot of people. Any software is subject to adversarial attacks such as people deliberately trying to use malware to take it down.

When you move in on AI, you realize that it has tremendous power but there are lots of concerns and caveats that have to be reckoned with. We can’t let our guard down. Once we accept an algorithm and implement it, then the question is: Is it under surveillance to pick up anything that may go off track?

How might AI and machine learning influence the overall healthcare burden in the United States and reduce the number of unnecessary surgical procedures or even misdiagnoses that we see?

With AI, accuracy can go way up. We already have a lot of randomized trials on colonoscopies, which show superior pickup of polyps. Clinicians miss a lot of things because we’re so busy and tired. Our eyes are not as good as the machine-trained eyes. But as a patient, why would you want to go through that terrible procedure and have a potential cancerous polyp missed?

I recently consulted with the National Health Service in the UK, and the charge was: What is AI going to do to health care generally and the workforce in particular? I predict that by using machines to support clinicians, we may not have to grow the workforce as much. We could get more autonomy and efficiency for patients.

Keyboard liberation is one of the biggest benefits that could happen. It could free up time and ease the disenchantment and, frankly, depression among clinicians. We didn’t do all of the work to become a doctor or a nurse to work as a data clerk.

A lot of dreaded, menial work revolves around dealing with pre-authorizations for patients to get them a particular medication or test that the insurance company doesn’t want to provide. We can use ChatGPT for these back-office operations. Synthetic notes from large language models, with oversight, are also going to replace the way the notes are made now. During a doctor’s visit, details on tests or prescriptions or the next patient visit, for example, can be done beautifully through voice without typing on a keyboard.

AI could cause a revamping that will change the whole landscape of the workforce and the providers’ day-to-day jobs.

How might this technology streamline drug development processes or things like protein synthesis?

This has been a hot topic. One of the medicines that had life-saving capability in the pandemic, baricitinib, was identified as a COVID-19 treatment through AI.

There are many ways that AI is facilitating drug discovery. We already have some evidence that we can use AI for data mining and creating new molecular structures much faster. We’ve seen some new molecular entities entering clinical trials very quickly. One day, we might not have to use animal models. We could predict toxicity through AI and run synthetic trials.

It’s an endless sea of ideas. We have seen some early wins. We haven’t yet seen a de novo drug approved that was designed with AI support, but we will. So I do think this is an exciting area for the future. We’ll see a lot more of it. But like everything else that we’ve talked about, it is still in the early stages.

What are your thoughts on the rise of robotic, AI-driven medical assistants?

There’s a whole spectrum of avatars and robots in the space, and we’re increasingly seeing humanoid features. For mental health, which is the biggest burden that’s unmet with our healthcare workforce, data shows people are more comfortable talking to and giving away their deepest secrets to an avatar or a robot than they are to a human. So we should take advantage of that.

There are studies on elderly people in Japan who feel great comfort with these robots. They keep them company. It may not be perfect, but it helps with issues of loneliness. But we’ve also seen deepfakes created with so-called generative adversarial networks (GANs). So it could be good and bad as we go forward.

Are there any other major applications that we haven’t discussed that you would want the public to be aware of?

The applications are endless. One of the chapters in my book was called “Deep Diet.” It’s the idea that each of us could get specific nutritional recommendations based on our biology and physiology.

This whole idea that we’re all the same is completely wrong. We’ve seen great evidence behind the concept that each of us is truly unique, even identical twins. There is no such thing as a healthy diet that works for all people. It’s very different from one person to the next. Unraveling that realm in the years ahead is another exciting frontier.

What is your ultimate vision for a future where clinicians and AI can work synergistically? Should the public have hope that this technology is going to be truly revolutionary, or is it too early to say?

I wrote Deep Medicine to help people embrace the idea and be excited about it. What has happened since then has only added to that, because now we have a whole new large language model phase of AI, which is going to make it all go faster.

It works like Moore’s Law where chips would double their capacity every 18 months or so. We’re seeing AI double its parameters every few months. This is now going into high gear.

The main point here is to keep our minds open to the potential of a rescue for improving medicine, taking it back to an era where it was remarkably effective. We could restore the patient-doctor relationship, but with even greater accuracy and illness prevention, which is a fantasy now but someday may be actualized. These are things that we should be excited about and try to press on. Someday we’ll get there.

This interview has been edited for length and clarity.

If you have any questions or feedback, please contact: curalink@thecurafoundation.com

Newsletter created by Ali Pattillo, health and science reporter and consulting producer for the Cura Foundation; Catherine Tone, consulting editor; and Svetlana Izrailova, associate director at the Cura Foundation.